Over the past year I’ve been experimenting every so often with an audio-visual performance interface based on Lissajous Displays and the 3D Oscilloscope series by Dan Iglesia. My version uses a glove-based motion capture interface that we have here at UCSB in the Allosphere, our 3-story multimedia environment. Here’s what they look like:

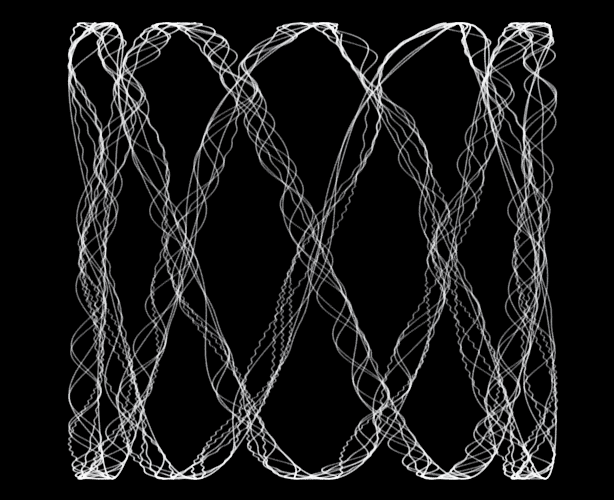

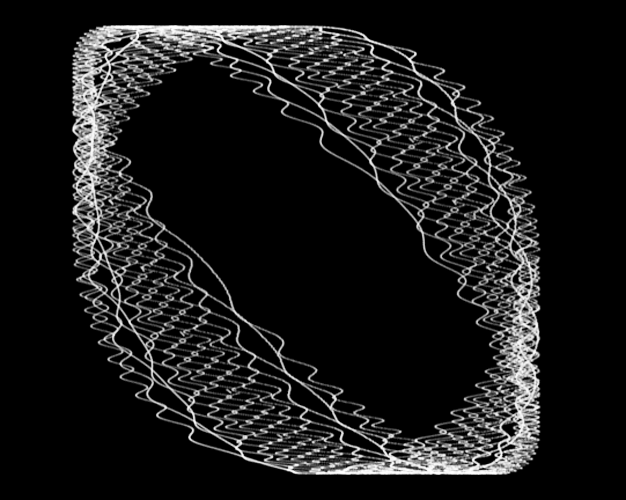

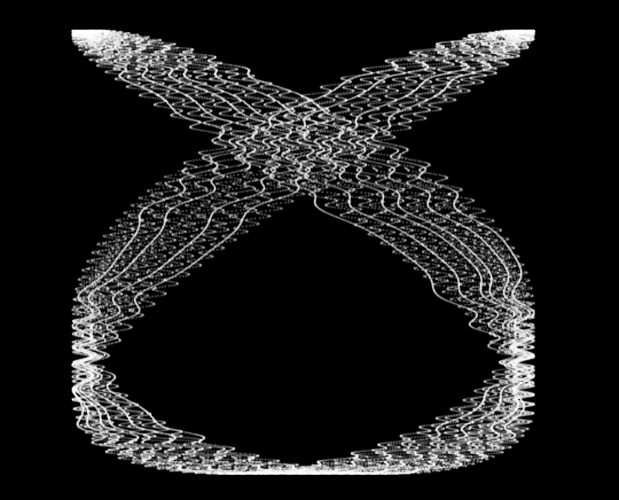

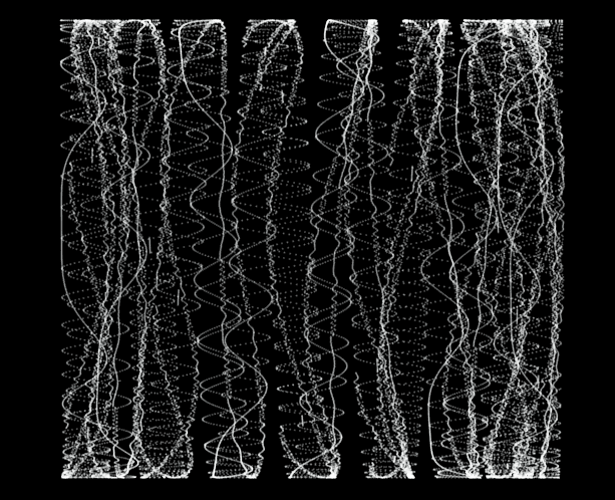

And here are a few screenshots of the performances. Basically, the performer puts on these gloves, which control the frequencies of multiple oscillators. The oscillators are then visualized as an oscilloscope display, and the resulting pixels are translated into sound. A skilled performer can access many interesting shapes, like the ones below, by tuning the oscillators in harmonic ratios.
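To give a rough feel for why harmonic ratios matter, here is a minimal 2D sketch in Python. It is not the code behind the performances: the function names (`scope_point`, `lissajous_points`, `closure_gap`), the quarter-cycle phase offset, and the sample counts are all assumptions made for illustration. It drives the x and y axes of an imaginary scope with two sine oscillators and checks whether the trace returns to its starting point after one second.

```python
import math

def scope_point(freq_x, freq_y, t, phase=math.pi / 2):
    """Return the (x, y) scope position at time t for two sine oscillators.

    The phase offset on the x oscillator is an illustrative choice, not a
    value taken from the actual instrument.
    """
    x = math.sin(2 * math.pi * freq_x * t + phase)
    y = math.sin(2 * math.pi * freq_y * t)
    return x, y

def lissajous_points(freq_x, freq_y, n_samples=1000, duration=1.0):
    """Sample the trace at n_samples evenly spaced times in [0, duration)."""
    return [
        scope_point(freq_x, freq_y, duration * i / n_samples)
        for i in range(n_samples)
    ]

def closure_gap(freq_x, freq_y, duration=1.0):
    """Distance between the trace's start point and its point at `duration`.

    A gap near zero means the figure closes on itself and stays still on
    the display; a larger gap means the figure drifts from pass to pass.
    """
    x0, y0 = scope_point(freq_x, freq_y, 0.0)
    x1, y1 = scope_point(freq_x, freq_y, duration)
    return math.hypot(x1 - x0, y1 - y0)

# A 3:2 ratio (hypothetical tuning) versus a slightly detuned one.
harmonic_trace = lissajous_points(3.0, 2.0)
harmonic_gap = closure_gap(3.0, 2.0)
detuned_gap = closure_gap(3.0, 2.1)
```

With the 3:2 tuning the gap is essentially zero, so the curve retraces itself and reads as a stable shape. With the detuned pair the end point lands somewhere else, which on a scope shows up as a figure that slowly rotates or smears.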

Music